Artificial intelligence is sweeping the Department of Defense, and in search of new ways to support the warfighter on the ever-changing modern battlefield, the department is now heavily focused on perfecting this emerging technology.

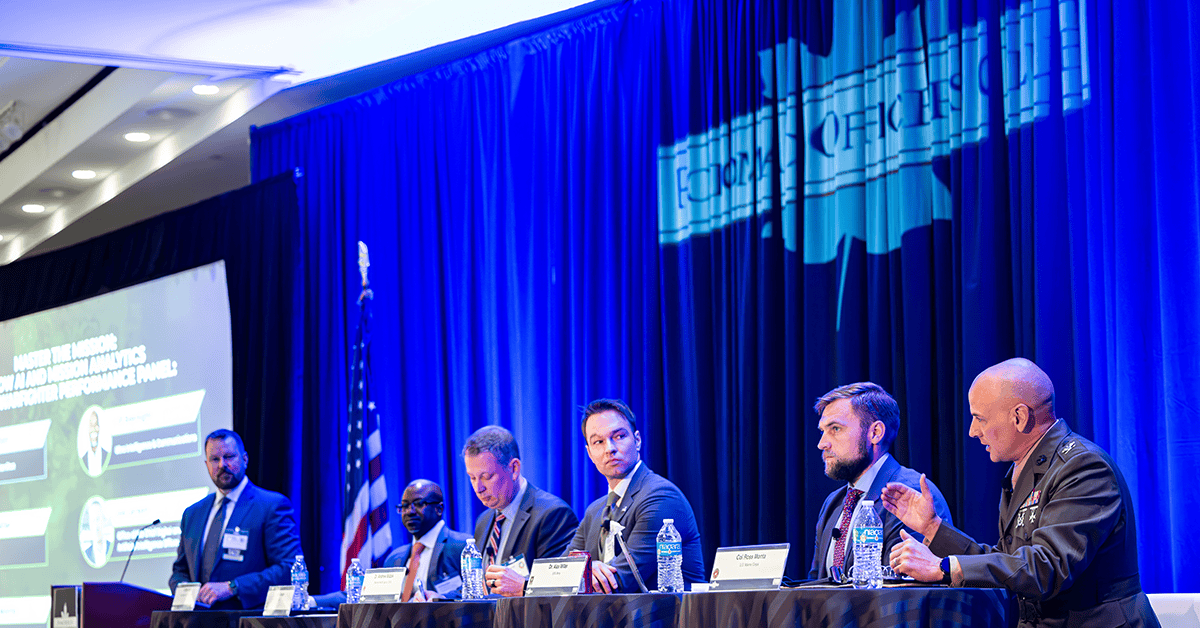

Ensuring that AI implementation aligns with DOD values and objectives is an effort that requires careful attention, according to experts in the field, who came together for a panel discussion at the Potomac Officers Club’s 8th Annual Army Summit last week.

“If we are going to govern based on principles, those principles have to be informed and they have to be correct,” emphasized Joe Larson, deputy chief digital and artificial intelligence officer for algorithmic warfare at the Chief Digital and Artificial Intelligence Office (CDAO).

He said that this sort of governance is a “significant challenge” due to its implication that “artificial intelligence is a monolithic technology from which one set of regulations can apply against the range of use cases and workflows and problems that it addresses,” which is often not true.

In the “ungoverned space” of AI, there must be a balance between what the technology actually is and how it can boost a person’s ability to do their job, according to Dr. Alex Miller, senior advisor for science and technology to the vice chief of staff of the U.S. Army.

“When warfighters have a mission, what we’re really talking about is how we augment that. How do we apply the rules, the norms, the standards and the cultural aspect of what it is to be an American warfighter or a coalition warfighter, and how do we apply the technology?” Miller said.

“Technology is not the end, it’s a means,” he stressed.

The U.S. Marine Corps shares this perspective. Col. Ross Monta, portfolio manager for command element systems in the USMC Systems Command, emphasized that the service branch is “not going to replace a Marine’s decision space, that commander’s decision space.”

“[AI] is to give him better, more enriched, more reliable and more accurate information faster to create that OODA loop of decision making to outpace our enemy,” he said.

Offering an industry viewpoint, Clif Basnight, vice president of strategic technologies at Ultra Intelligence & Communications, said that building AI with ethical principles in mind is the way to establish operator trust in these systems.

“If we are not adhering to those principles, it’s going to be very hard for them to trust that we are giving them the capability that they need,” he said.

Infrastructure is a key component in ensuring that AI is implemented successfully. Larson said that looking at new AI technologies without considering their foundation can cause the warfighter to view AI as an all-encompassing solution for any problem.

“We don’t want commanders being so excited about AI that they drive their staff to employ it in ways that don’t align with the ethical principles,” he said.

Basnight added that even the data itself can be viewed as infrastructure.

“Without this data, we’re never going to train these algorithms to do the thing that you need to do,” he emphasized.

Dr. Andrew Midzak, strategic research program integrator for the Military Operational Medicine Research Program at the Defense Health Agency, noted the small “reservoir of material” for AI. He said that in certain fields, there is a wealth of data to be analyzed, but in the military space, there is currently a lack of relevant training data that can be used across agencies.

“Go to any unit, and you will find a number of early adopters of [AI tools]. But the systematization and scalability of these is currently a significant gap,” he said.

Though caution is important, the panelists said that the DOD has taken several steps forward in AI implementation.

Larson referenced CDAO’s Global Information Dominance Experiments program, which tests AI technologies and assesses their potential uses in multi-domain operations. He said that the initiative is carried out with foundational issues, such as infrastructure and processes, in mind.

Midzak said that in the medical space, many experts see potential for AI use in radiology.

“If people are building a common infrastructure and understand and are able to share data, the ability to integrate and make things interoperable emerges from that,” said Larson.
