Artificial intelligence has seen a meteoric rise across the public and private sectors alike as new advancements, policies and opportunities fuel continued growth of, and interest in, the promising technology. However, AI is not without risk, and government leaders are weighing those risks against its potential benefits as they seek to adopt it.

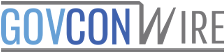

Dr. Kathleen Fisher, director of the Information Innovation Office at the Defense Advanced Research Projects Agency, suggested that some AI tools, especially generative AI, may need more time and research to mature before they can be safely used and trusted in government and military applications.

“The fact that transformer-based models generalize or hallucinate in these weird ways is a really big problem,” Fisher said at the Potomac Officers Club’s 5th Annual AI Summit in March. “Not necessarily a big problem for certain kinds of applications, but for national security applications where we really, really, really need to trust what the models are saying, it can be a really big problem. So far we haven’t found a way to control these models in a long-term kind of way.”

Fisher pointed to generative AI models such as OpenAI’s Sora as examples of how far AI innovation has come and, at the same time, how much work remains before such models can be trusted. For Fisher, the way to master AI technology and achieve dominance in the space is to lean in.

“We need to — this is my personal opinion — we need to lean in and figure out what is the current state of AI good for from a national security perspective? How do we use it? And how do we work around the limitations?” she posed.

For example, AI could help draft routine reports and summarize vast amounts of information, saving employees a tremendous amount of time and freeing them up for more cognitively demanding work. But federal officials need to look beyond the potential benefits; they also need to play defense and figure out how AI could be used against the United States. Fisher noted that both perspectives are necessary for driving AI innovation, but ultimately, agencies simply need to start using the technology.

“If we don’t figure out how to use these models for good and leverage their possibilities now, we risk falling way behind the curve and having adversaries figure out how to do it or just never being able to do this,” said Fisher. “On the other side, we need to figure out what the threats are for national security and being able to defend against those threats. And these are related to each other. If we don’t start using it, we won’t really understand what the threats are. We need to dig in and do both at the same time.”

DARPA currently has a number of AI programs under way, and Fisher noted that nearly 70 percent of DARPA programs involve AI in some way. One such program is the Semantic Forensics effort, known as SemaFor, which detects manipulated media. Fisher said the program was able to detect manipulated radiology images with high confidence and has been transitioned to the Department of Homeland Security.

Another DARPA AI program aims to understand both what is possible with adversarial AI and how to defend against it. Over the past year, the Guaranteeing AI Robustness Against Deception, or GARD, program has funded research attacks on large language models. GARD researchers developed a new class of adversarial attacks that could break the safety guardrails of LLMs with a nearly perfect success rate against open-source models, an 87 percent success rate against the proprietary GPT-3.5 and a 50 percent success rate against GPT-4.

But in today’s rapidly changing AI landscape, more research, conducted at speed, is critical. Fisher stressed that now is not the time to slow down or avoid the issues that come with AI.

“We’re living in interesting times with respect to AI. And those interesting times mean that we both have huge opportunities for national security, and we need to lean into those interesting times and take advantage of those interesting times. It’s not a time to put our head in the sand and say we’re just going to wait for somebody else to go perfect AI,” she warned.
